Cifar10

This page gives a quick introduction to OpenPifPaf’s Cifar10 plugin that is part of openpifpaf.plugins.

It demonstrates the plugin architecture.

There already is a nice dataset for CIFAR10 in torchvision and a related PyTorch tutorial.

The plugin adds a DataModule that uses this dataset.

Let’s start with the setup for this notebook and list all registered OpenPifPaf plugins:

import openpifpaf

print(openpifpaf.plugin.REGISTERED.keys())

dict_keys(['openpifpaf.plugins.animalpose', 'openpifpaf.plugins.apollocar3d', 'openpifpaf.plugins.cifar10', 'openpifpaf.plugins.coco', 'openpifpaf.plugins.crowdpose', 'openpifpaf.plugins.nuscenes', 'openpifpaf.plugins.posetrack', 'openpifpaf.plugins.wholebody'])
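The keys printed above are dotted module names, one per plugin. The following is a minimal sketch of a name-based registry of that kind. It is not OpenPifPaf's actual implementation: the function `register_submodules` and the local `REGISTERED` dict are hypothetical, and the standard-library package `xml` only stands in for a package of plugins.

```python
import importlib
import pkgutil

# Hypothetical registry: dotted module name -> imported module.
REGISTERED = {}


def register_submodules(package_name):
    """Import every public submodule of a package and record it by name."""
    package = importlib.import_module(package_name)
    for module_info in pkgutil.iter_modules(package.__path__):
        if module_info.name.startswith('_'):
            continue  # skip private helper modules
        full_name = f'{package_name}.{module_info.name}'
        REGISTERED[full_name] = importlib.import_module(full_name)


# 'xml' is only a stand-in for a plugin package in this sketch.
register_submodules('xml')
print(sorted(REGISTERED.keys()))
```

A registry keyed by module name lets new plugins be discovered by importing them, without editing a central list.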

Next, we configure and instantiate the Cifar10 datamodule and look at the configured head metas:

# configure

openpifpaf.plugins.cifar10.datamodule.Cifar10.debug = True

openpifpaf.plugins.cifar10.datamodule.Cifar10.batch_size = 1

# instantiate and inspect

datamodule = openpifpaf.plugins.cifar10.datamodule.Cifar10()

datamodule.set_loader_workers(0) # no multi-processing to see debug outputs in main thread

datamodule.head_metas

[CifDet(name='cifdet', dataset='cifar10', head_index=None, base_stride=None, upsample_stride=1, categories=('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck'), training_weights=None)]

We see here that CIFAR10 is being treated as a detection dataset (CifDet) and has 10 categories.
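To illustrate what treating a classification dataset as a detection dataset can mean, here is a minimal sketch that wraps a class label in a single bounding-box annotation. The annotation layout, the full-image box and the helper name `label_to_detection` are assumptions for illustration; the plugin's real encoding may differ.

```python
# Category names copied from the head meta shown above.
CATEGORIES = ('plane', 'car', 'bird', 'cat', 'deer',
              'dog', 'frog', 'horse', 'ship', 'truck')


def label_to_detection(label, image_size=32):
    """Turn a class index into a one-element list of detection annotations.

    Assumption: the object is described by one box spanning the whole
    image, given as [x, y, width, height].
    """
    if not 0 <= label < len(CATEGORIES):
        raise ValueError(f'label {label} is out of range')
    return [{
        'bbox': [0.0, 0.0, float(image_size), float(image_size)],
        'category_index': label,          # zero-based index into CATEGORIES
        'category': CATEGORIES[label],
    }]


print(label_to_detection(9))
```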

To create a network, we use openpifpaf.network.Factory: it is constructed with the name of the base network, cifar10net, and its factory() method takes the list of head metas.

net = openpifpaf.network.Factory(base_name='cifar10net').factory(head_metas=datamodule.head_metas)

We can inspect the training data that is returned from datamodule.train_loader():

# configure visualization

openpifpaf.visualizer.Base.set_all_indices(['cifdet:9:regression']) # category 9 = truck

# Create a wrapper for a data loader that iterates over a set of matplotlib axes.
# The only purpose is to set a different matplotlib axis before each call to
# retrieve the next image from the data_loader so that it produces multiple
# debug images in one canvas side-by-side.
def loop_over_axes(axes, data_loader):
    previous_common_ax = openpifpaf.visualizer.Base.common_ax
    train_loader_iter = iter(data_loader)
    for ax in axes.reshape(-1):
        openpifpaf.visualizer.Base.common_ax = ax
        yield next(train_loader_iter, None)
    openpifpaf.visualizer.Base.common_ax = previous_common_ax

# create a canvas and loop over the first few entries in the training data
with openpifpaf.show.canvas(ncols=6, nrows=3, figsize=(10, 5)) as axs:
    for images, targets, meta in loop_over_axes(axs, datamodule.train_loader()):
        pass

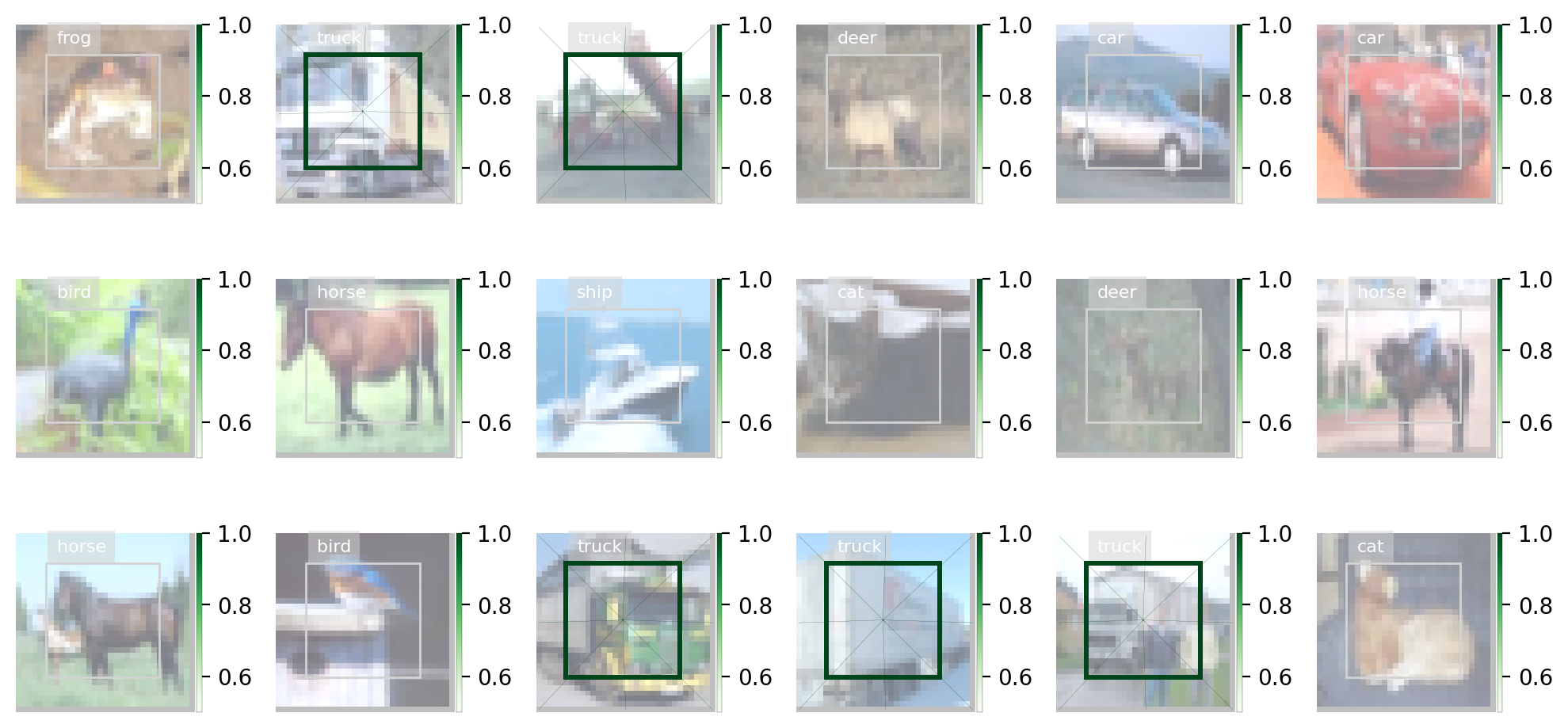

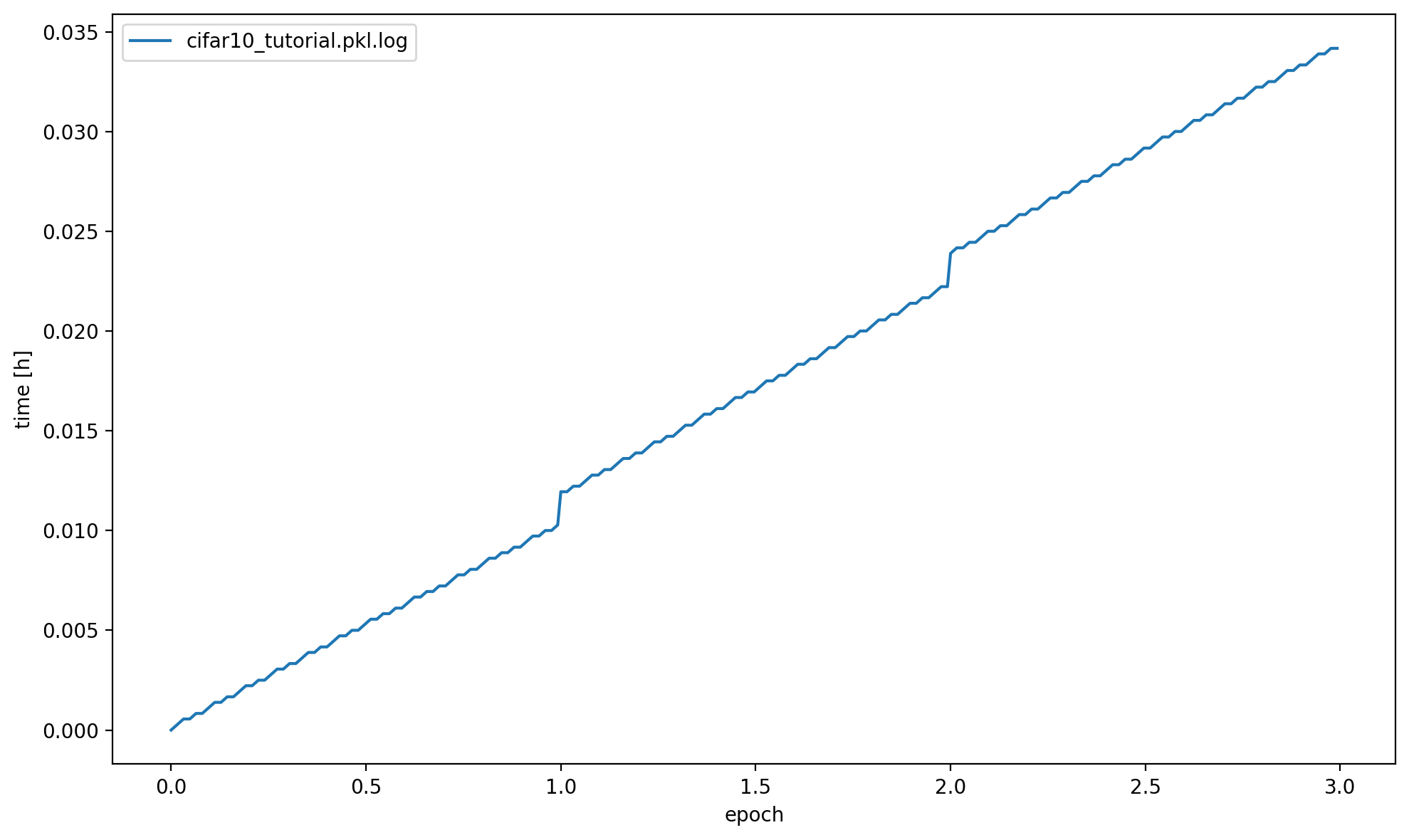

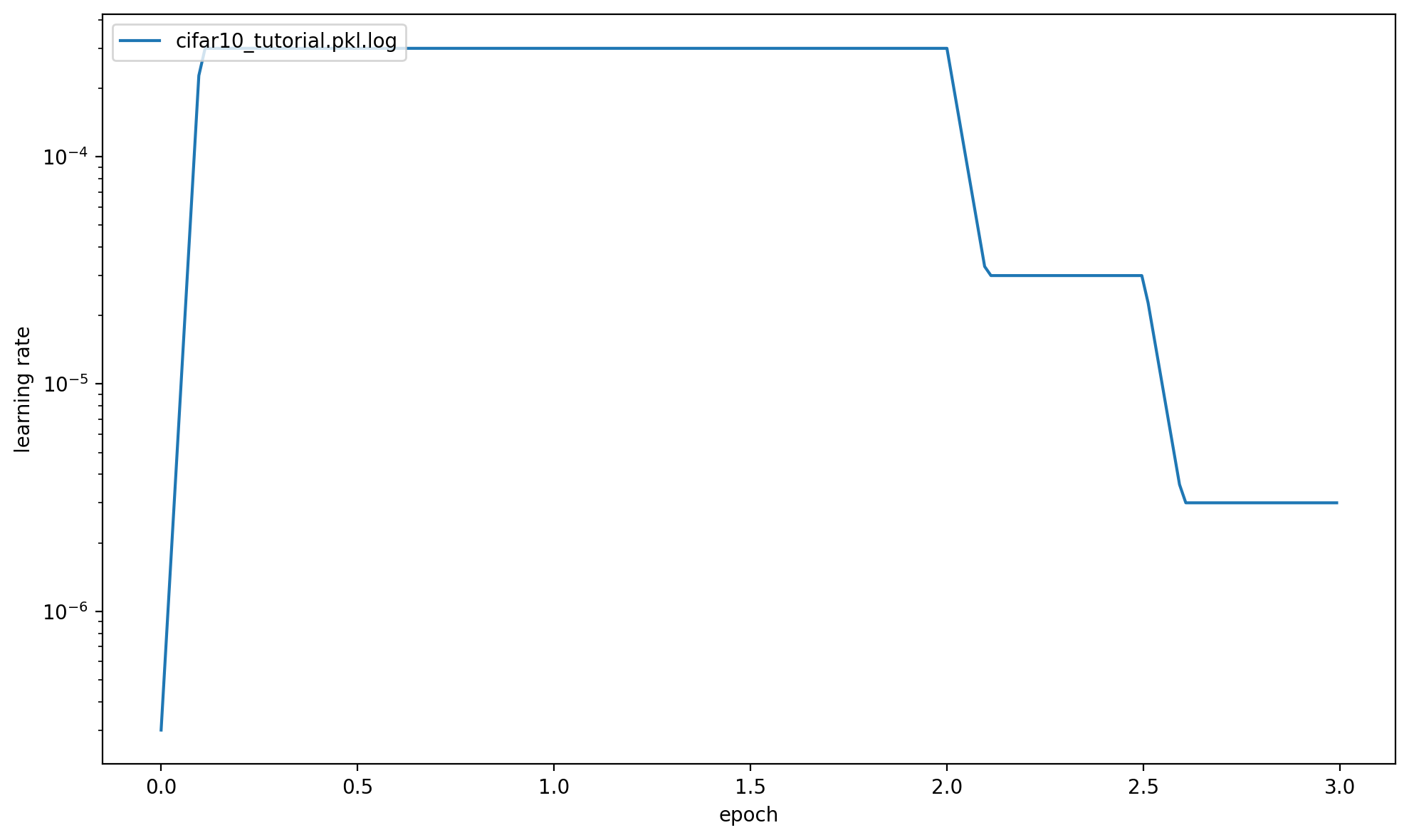
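The wrapper used above relies on a generator that changes a piece of shared state right before each item is fetched. The sketch below shows the same pattern without OpenPifPaf or matplotlib. The names `Visualizer`, `loop_over_slots` and `produce` are hypothetical, and the `try`/`finally` is an addition that restores the previous state even if the loop stops early.

```python
class Visualizer:
    # Stands in for a shared class attribute such as a common axis.
    current_slot = None


def loop_over_slots(slots, items):
    """Set the shared slot before fetching each item, then restore it."""
    previous = Visualizer.current_slot
    iterator = iter(items)
    try:
        for slot in slots:
            Visualizer.current_slot = slot
            # The item is produced only now, so the producer sees `slot`.
            yield next(iterator, None)
    finally:
        Visualizer.current_slot = previous


def produce():
    """Endless item source that records which slot was active."""
    n = 0
    while True:
        yield (n, Visualizer.current_slot)
        n += 1


seen = list(loop_over_slots(['a', 'b', 'c'], produce()))
print(seen)
```

Because items are pulled lazily inside the generator, each one is created while its own slot is active, which is what allows one debug image per axis.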

Training

We train a very small network, cifar10net, for only three epochs (--epochs=3). Afterwards, we will investigate its predictions.

%%bash
python -m openpifpaf.train \
  --dataset=cifar10 --basenet=cifar10net --log-interval=50 \
  --epochs=3 --lr=0.0003 --momentum=0.95 --batch-size=16 \
  --lr-warm-up-epochs=0.1 --lr-decay 2.0 2.5 --lr-decay-epochs=0.1 \
  --loader-workers=2 --output=cifar10_tutorial.pkl
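The learning-rate flags in the command above describe a warm-up followed by step decays. The sketch below is one way to model that schedule, using the values that appear in the logged arguments (warm-up factor 0.001, decay factor 0.1, 3125 batches per epoch). The function `learning_rate` is hypothetical and not the library's code. The warm-up part reproduces the `lr` values in the training log, while the decay part is an assumption that the shown log does not cover.

```python
def learning_rate(batch_index, *, base_lr=0.0003, batches_per_epoch=3125,
                  warm_up_epochs=0.1, warm_up_factor=0.001,
                  decay_starts=(2.0, 2.5), decay_epochs=0.1,
                  decay_factor=0.1):
    """Return the modelled learning rate at a global batch index."""
    epoch = batch_index / batches_per_epoch
    scale = 1.0

    # Exponential warm-up from base_lr * warm_up_factor up to base_lr.
    if epoch < warm_up_epochs:
        scale *= warm_up_factor ** (1.0 - epoch / warm_up_epochs)

    # Assumed: each decay multiplies the rate by decay_factor, spread
    # smoothly over decay_epochs after its start epoch.
    for start in decay_starts:
        if epoch >= start + decay_epochs:
            scale *= decay_factor
        elif epoch > start:
            scale *= decay_factor ** ((epoch - start) / decay_epochs)

    return base_lr * scale


for batch in (0, 50, 100, 300, 350):
    print(batch, learning_rate(batch))
```

With these settings the warm-up ends after 0.1 × 3125 = 312.5 batches, which matches the log reaching `lr` 0.0003 by batch 350.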

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

INFO:__main__:Running Python 3.10.13

INFO:__main__:Running PyTorch 2.2.1+cpu

INFO:openpifpaf.network.basenetworks:cifar10net: stride = 16, output features = 128

INFO:openpifpaf.network.losses.multi_head:multihead loss: ['cifar10.cifdet.c', 'cifar10.cifdet.vec', 'cifar10.cifdet.scales'], [1.0, 1.0, 1.0]

INFO:openpifpaf.logger:{'type': 'process', 'argv': ['/opt/hostedtoolcache/Python/3.10.13/x64/lib/python3.10/site-packages/openpifpaf/train.py', '--dataset=cifar10', '--basenet=cifar10net', '--log-interval=50', '--epochs=3', '--lr=0.0003', '--momentum=0.95', '--batch-size=16', '--lr-warm-up-epochs=0.1', '--lr-decay', '2.0', '2.5', '--lr-decay-epochs=0.1', '--loader-workers=2', '--output=cifar10_tutorial.pkl'], 'args': {'output': 'cifar10_tutorial.pkl', 'disable_cuda': False, 'ddp': False, 'local_rank': None, 'sync_batchnorm': True, 'resume_training': None, 'quiet': False, 'debug': False, 'log_stats': False, 'xcit_out_channels': None, 'xcit_out_maxpool': False, 'xcit_pretrained': True, 'swin_drop_path_rate': 0.2, 'swin_input_upsample': False, 'swin_use_fpn': False, 'swin_fpn_out_channels': None, 'swin_fpn_level': 3, 'swin_pretrained': True, 'shufflenetv2_pretrained': True, 'squeezenet_pretrained': True, 'shufflenetv2k_input_conv2_stride': 0, 'shufflenetv2k_input_conv2_outchannels': None, 'shufflenetv2k_stage4_dilation': 1, 'shufflenetv2k_kernel': 5, 'shufflenetv2k_conv5_as_stage': False, 'shufflenetv2k_instance_norm': False, 'shufflenetv2k_group_norm': False, 'shufflenetv2k_leaky_relu': False, 'mobilenetv2_pretrained': True, 'mobilenetv3_pretrained': True, 'resnet_pretrained': True, 'resnet_pool0_stride': 0, 'resnet_input_conv_stride': 2, 'resnet_input_conv2_stride': 0, 'resnet_block5_dilation': 1, 'resnet_remove_last_block': False, 'hrformer_scale_level': 0, 'hrformer_pretrained': True, 'clipconvnext_pretrained': True, 'convnextv2_pretrained': True, 'cf4_dropout': 0.0, 'cf4_inplace_ops': True, 'checkpoint': None, 'basenet': 'cifar10net', 'cross_talk': 0.0, 'download_progress': True, 'head_consolidation': 'filter_and_extend', 'lambdas': None, 'component_lambdas': None, 'auto_tune_mtl': False, 'auto_tune_mtl_variance': False, 'task_sparsity_weight': 0.0, 'loss_prescale': 1.0, 'regression_loss': 'laplace', 'bce_total_soft_clamp': None, 'soft_clamp': 5.0, 'r_smooth': 
0.0, 'laplace_soft_clamp': 5.0, 'background_weight': 1.0, 'focal_alpha': 0.5, 'focal_gamma': 1.0, 'focal_detach': False, 'focal_clamp': True, 'bce_min': 0.0, 'bce_soft_clamp': 5.0, 'bce_background_clamp': -15.0, 'b_scale': 1.0, 'scale_log': False, 'scale_soft_clamp': 5.0, 'epochs': 3, 'train_batches': None, 'val_batches': None, 'clip_grad_norm': 0.0, 'clip_grad_value': 0.0, 'log_interval': 50, 'val_interval': 1, 'stride_apply': 1, 'fix_batch_norm': False, 'ema': 0.01, 'profile': None, 'cif_side_length': 4, 'caf_min_size': 3, 'caf_fixed_size': False, 'caf_aspect_ratio': 0.0, 'encoder_suppress_selfhidden': True, 'encoder_suppress_invisible': False, 'encoder_suppress_collision': False, 'momentum': 0.95, 'beta2': 0.999, 'adam_eps': 1e-06, 'nesterov': True, 'weight_decay': 0.0, 'adam': False, 'adamw': False, 'amsgrad': False, 'lr': 0.0003, 'lr_decay_type': 'step', 'lr_decay': [2.0, 2.5], 'lr_decay_factor': 0.1, 'lr_decay_epochs': 0.1, 'lr_warm_up_type': 'exp', 'lr_warm_up_start_epoch': 0, 'lr_warm_up_epochs': 0.1, 'lr_warm_up_factor': 0.001, 'lr_warm_restarts': [], 'lr_warm_restart_duration': 0.5, 'dataset': 'cifar10', 'loader_workers': 2, 'batch_size': 16, 'dataset_weights': None, 'animal_train_annotations': 'data-animalpose/annotations/animal_keypoints_20_train.json', 'animal_val_annotations': 'data-animalpose/annotations/animal_keypoints_20_val.json', 'animal_train_image_dir': 'data-animalpose/images/train/', 'animal_val_image_dir': 'data-animalpose/images/val/', 'animal_square_edge': 513, 'animal_extended_scale': False, 'animal_orientation_invariant': 0.0, 'animal_blur': 0.0, 'animal_augmentation': True, 'animal_rescale_images': 1.0, 'animal_upsample': 1, 'animal_min_kp_anns': 1, 'animal_bmin': 1, 'animal_eval_test2017': False, 'animal_eval_testdev2017': False, 'animal_eval_annotation_filter': True, 'animal_eval_long_edge': 0, 'animal_eval_extended_scale': False, 'animal_eval_orientation_invariant': 0.0, 'apollo_train_annotations': 
'data-apollocar3d/annotations/apollo_keypoints_66_train.json', 'apollo_val_annotations': 'data-apollocar3d/annotations/apollo_keypoints_66_val.json', 'apollo_train_image_dir': 'data-apollocar3d/images/train/', 'apollo_val_image_dir': 'data-apollocar3d/images/val/', 'apollo_square_edge': 513, 'apollo_extended_scale': False, 'apollo_orientation_invariant': 0.0, 'apollo_blur': 0.0, 'apollo_augmentation': True, 'apollo_rescale_images': 1.0, 'apollo_upsample': 1, 'apollo_min_kp_anns': 1, 'apollo_bmin': 1, 'apollo_apply_local_centrality': False, 'apollo_eval_annotation_filter': True, 'apollo_eval_long_edge': 0, 'apollo_eval_extended_scale': False, 'apollo_eval_orientation_invariant': 0.0, 'apollo_use_24_kps': False, 'cifar10_root_dir': 'data-cifar10/', 'cifar10_download': False, 'cocodet_train_annotations': 'data-mscoco/annotations/instances_train2017.json', 'cocodet_val_annotations': 'data-mscoco/annotations/instances_val2017.json', 'cocodet_train_image_dir': 'data-mscoco/images/train2017/', 'cocodet_val_image_dir': 'data-mscoco/images/val2017/', 'cocodet_square_edge': 513, 'cocodet_extended_scale': False, 'cocodet_orientation_invariant': 0.0, 'cocodet_blur': 0.0, 'cocodet_augmentation': True, 'cocodet_rescale_images': 1.0, 'cocodet_upsample': 1, 'cocokp_train_annotations': 'data-mscoco/annotations/person_keypoints_train2017.json', 'cocokp_val_annotations': 'data-mscoco/annotations/person_keypoints_val2017.json', 'cocokp_train_image_dir': 'data-mscoco/images/train2017/', 'cocokp_val_image_dir': 'data-mscoco/images/val2017/', 'cocokp_square_edge': 385, 'cocokp_with_dense': False, 'cocokp_extended_scale': False, 'cocokp_orientation_invariant': 0.0, 'cocokp_blur': 0.0, 'cocokp_augmentation': True, 'cocokp_rescale_images': 1.0, 'cocokp_upsample': 1, 'cocokp_min_kp_anns': 1, 'cocokp_bmin': 0.1, 'cocokp_eval_test2017': False, 'cocokp_eval_testdev2017': False, 'coco_eval_annotation_filter': True, 'coco_eval_long_edge': 641, 'coco_eval_extended_scale': False, 
'coco_eval_orientation_invariant': 0.0, 'crowdpose_train_annotations': 'data-crowdpose/json/crowdpose_train.json', 'crowdpose_val_annotations': 'data-crowdpose/json/crowdpose_val.json', 'crowdpose_image_dir': 'data-crowdpose/images/', 'crowdpose_square_edge': 385, 'crowdpose_extended_scale': False, 'crowdpose_orientation_invariant': 0.0, 'crowdpose_augmentation': True, 'crowdpose_rescale_images': 1.0, 'crowdpose_upsample': 1, 'crowdpose_min_kp_anns': 1, 'crowdpose_eval_test': False, 'crowdpose_eval_long_edge': 641, 'crowdpose_eval_extended_scale': False, 'crowdpose_eval_orientation_invariant': 0.0, 'crowdpose_index': None, 'nuscenes_train_annotations': '../../../NuScenes/mscoco_style_annotations/nuimages_v1.0-train.json', 'nuscenes_val_annotations': '../../../NuScenes/mscoco_style_annotations/nuimages_v1.0-val.json', 'nuscenes_train_image_dir': '../../../NuScenes/nuimages-v1.0-all-samples', 'nuscenes_val_image_dir': '../../../NuScenes/nuimages-v1.0-all-samples', 'nuscenes_square_edge': 513, 'nuscenes_extended_scale': False, 'nuscenes_orientation_invariant': 0.0, 'nuscenes_blur': 0.0, 'nuscenes_augmentation': True, 'nuscenes_rescale_images': 1.0, 'nuscenes_upsample': 1, 'posetrack2018_train_annotations': 'data-posetrack2018/annotations/train/*.json', 'posetrack2018_val_annotations': 'data-posetrack2018/annotations/val/*.json', 'posetrack2018_eval_annotations': 'data-posetrack2018/annotations/val/*.json', 'posetrack2018_data_root': 'data-posetrack2018', 'posetrack_square_edge': 385, 'posetrack_with_dense': False, 'posetrack_augmentation': True, 'posetrack_rescale_images': 1.0, 'posetrack_upsample': 1, 'posetrack_min_kp_anns': 1, 'posetrack_bmin': 0.1, 'posetrack_sample_pairing': 0.0, 'posetrack_image_augmentations': 0.0, 'posetrack_max_shift': 30.0, 'posetrack_eval_long_edge': 801, 'posetrack_eval_extended_scale': False, 'posetrack_eval_orientation_invariant': 0.0, 'posetrack_ablation_without_tcaf': False, 'posetrack2017_eval_annotations': 
'data-posetrack2017/annotations/val/*.json', 'posetrack2017_data_root': 'data-posetrack2017', 'cocokpst_max_shift': 30.0, 'wholebody_train_annotations': 'data-mscoco/annotations/person_keypoints_train2017_wholebody_pifpaf_style.json', 'wholebody_val_annotations': 'data-mscoco/annotations/coco_wholebody_val_v1.0.json', 'wholebody_train_image_dir': 'data-mscoco/images/train2017/', 'wholebody_val_image_dir': 'data-mscoco/images/val2017', 'wholebody_square_edge': 385, 'wholebody_extended_scale': False, 'wholebody_orientation_invariant': 0.0, 'wholebody_blur': 0.0, 'wholebody_augmentation': True, 'wholebody_rescale_images': 1.0, 'wholebody_upsample': 1, 'wholebody_min_kp_anns': 1, 'wholebody_bmin': 1.0, 'wholebody_apply_local_centrality': False, 'wholebody_eval_test2017': False, 'wholebody_eval_testdev2017': False, 'wholebody_eval_annotation_filter': True, 'wholebody_eval_long_edge': 641, 'wholebody_eval_extended_scale': False, 'wholebody_eval_orientation_invariant': 0.0, 'save_all': None, 'show': False, 'image_width': None, 'image_height': None, 'image_dpi_factor': 2.0, 'image_min_dpi': 50.0, 'show_file_extension': 'jpeg', 'textbox_alpha': 0.5, 'text_color': 'white', 'font_size': 8, 'monocolor_connections': False, 'line_width': None, 'skeleton_solid_threshold': 0.5, 'show_box': False, 'white_overlay': False, 'show_joint_scales': False, 'show_joint_confidences': False, 'show_decoding_order': False, 'show_frontier_order': False, 'show_only_decoded_connections': False, 'video_fps': 10, 'video_dpi': 100, 'debug_indices': [], 'device': device(type='cpu'), 'pin_memory': False}, 'version': '0.14.2+1.g9ac6c0f', 'plugin_versions': {}, 'hostname': 'fv-az1247-578'}

INFO:openpifpaf.optimize:SGD optimizer

INFO:openpifpaf.optimize:training batches per epoch = 3125

INFO:openpifpaf.network.trainer:{'type': 'config', 'field_names': ['cifar10.cifdet.c', 'cifar10.cifdet.vec', 'cifar10.cifdet.scales']}

INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch000

INFO:openpifpaf.network.trainer:training state written: cifar10_tutorial.pkl.optim.epoch000

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 0, 'n_batches': 3125, 'time': 0.025, 'data_time': 0.103, 'lr': 3e-07, 'loss': 68.932, 'head_losses': [1.783, 67.149, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 50, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 9.1e-07, 'loss': 69.134, 'head_losses': [1.708, 67.426, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 100, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 2.74e-06, 'loss': 68.905, 'head_losses': [1.777, 67.128, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 150, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.004, 'lr': 8.26e-06, 'loss': 68.659, 'head_losses': [1.787, 66.872, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 200, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.001, 'lr': 2.495e-05, 'loss': 68.198, 'head_losses': [1.825, 66.373, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 250, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 7.536e-05, 'loss': 66.79, 'head_losses': [1.882, 64.909, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 300, 'n_batches': 3125, 'time': 0.013, 'data_time': 0.001, 'lr': 0.00022757, 'loss': 59.35, 'head_losses': [3.124, 56.226, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 350, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': 28.779, 'head_losses': [-0.678, 29.457, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 400, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': 2.78, 'head_losses': [-6.538, 9.318, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 450, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': 1.601, 'head_losses': [-7.875, 9.476, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 500, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -5.4, 'head_losses': [-8.175, 2.775, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 550, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -2.989, 'head_losses': [-8.807, 5.818, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 600, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -7.015, 'head_losses': [-8.955, 1.94, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 650, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -7.28, 'head_losses': [-8.905, 1.625, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 700, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -6.677, 'head_losses': [-9.033, 2.357, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 750, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.675, 'head_losses': [-9.114, 0.439, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 800, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 0.0003, 'loss': -4.795, 'head_losses': [-8.926, 4.131, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 850, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.186, 'head_losses': [-9.042, 0.856, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 900, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.696, 'head_losses': [-9.245, 0.549, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 950, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.398, 'head_losses': [-9.366, 0.967, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1000, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -7.055, 'head_losses': [-9.333, 2.279, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1050, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -7.042, 'head_losses': [-9.66, 2.618, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.387, 'head_losses': [-9.466, 1.079, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1150, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.816, 'head_losses': [-9.74, 0.925, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1200, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -6.137, 'head_losses': [-9.294, 3.157, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1250, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.752, 'head_losses': [-9.659, 0.907, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1300, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.252, 'head_losses': [-9.825, 0.573, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1350, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.264, 'head_losses': [-9.61, 1.346, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1400, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -7.973, 'head_losses': [-9.791, 1.818, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1450, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.845, 'head_losses': [-9.686, 0.841, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1500, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.001, 'lr': 0.0003, 'loss': -7.813, 'head_losses': [-9.955, 2.143, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1550, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.004, 'lr': 0.0003, 'loss': -8.403, 'head_losses': [-9.377, 0.974, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1600, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.876, 'head_losses': [-9.477, 0.601, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1650, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.74, 'head_losses': [-10.056, 0.316, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1700, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.715, 'head_losses': [-10.093, 0.378, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1750, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.206, 'head_losses': [-9.856, 0.65, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1800, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.126, 'head_losses': [-10.06, 0.933, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1850, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.925, 'head_losses': [-10.238, 0.314, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1900, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.246, 'head_losses': [-9.619, 1.373, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1950, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.02, 'head_losses': [-9.596, 0.576, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2000, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.005, 'lr': 0.0003, 'loss': -10.011, 'head_losses': [-10.127, 0.116, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2050, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.532, 'head_losses': [-10.147, 0.615, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2100, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.671, 'head_losses': [-10.011, 0.34, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2150, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.517, 'head_losses': [-9.908, 0.39, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2200, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.215, 'head_losses': [-9.911, 0.696, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2250, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.14, 'head_losses': [-9.691, 1.552, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2300, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.578, 'head_losses': [-10.075, 0.497, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2350, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.672, 'head_losses': [-9.903, 0.231, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2400, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.24, 'head_losses': [-10.152, 0.912, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2450, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -4.724, 'head_losses': [-9.411, 4.688, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2500, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.452, 'head_losses': [-9.887, 0.435, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2550, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.593, 'head_losses': [-9.607, 1.014, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2600, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.062, 'head_losses': [-10.034, 1.972, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2650, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.341, 'head_losses': [-9.787, 0.446, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2700, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.166, 'head_losses': [-9.954, 0.788, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2750, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.919, 'head_losses': [-10.09, 0.172, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2800, 'n_batches': 3125, 'time': 0.013, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.635, 'head_losses': [-9.994, 0.359, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2850, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.664, 'head_losses': [-10.154, 1.491, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2900, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.663, 'head_losses': [-10.521, 0.858, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2950, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.407, 'head_losses': [-9.67, 0.263, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 3000, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.074, 'head_losses': [-10.714, 0.64, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 3050, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -7.506, 'head_losses': [-10.074, 2.568, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 3100, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 0.0003, 'loss': -6.657, 'head_losses': [-8.986, 2.329, 0.0]}

INFO:openpifpaf.network.trainer:applying ema

INFO:openpifpaf.network.trainer:{'type': 'train-epoch', 'epoch': 1, 'loss': 0.36204, 'head_losses': [-8.21932, 8.58136, 0.0], 'time': 36.9, 'n_clipped_grad': 0, 'max_norm': 0.0}

INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch001

INFO:openpifpaf.network.trainer:training state written: cifar10_tutorial.pkl.optim.epoch001

INFO:openpifpaf.network.trainer:{'type': 'val-epoch', 'epoch': 1, 'loss': -10.30589, 'head_losses': [-10.23522, -0.07067, 0.0], 'time': 5.2}

INFO:openpifpaf.network.trainer:restoring params from before ema

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 0, 'n_batches': 3125, 'time': 0.02, 'data_time': 0.059, 'lr': 0.0003, 'loss': -10.01, 'head_losses': [-10.499, 0.489, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 50, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.581, 'head_losses': [-9.892, 0.311, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 100, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.041, 'head_losses': [-9.443, 0.402, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 150, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.095, 'head_losses': [-9.838, 0.743, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 200, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.533, 'head_losses': [-10.172, 0.639, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 250, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.974, 'head_losses': [-10.473, 0.499, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 300, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.046, 'head_losses': [-10.52, 0.474, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 350, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.564, 'head_losses': [-10.363, 0.799, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 400, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.868, 'head_losses': [-10.188, 0.32, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 450, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.477, 'head_losses': [-10.754, 1.277, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 500, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.788, 'head_losses': [-9.767, 0.979, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 550, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.376, 'head_losses': [-9.9, 0.524, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 600, 'n_batches': 3125, 'time': 0.013, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.307, 'head_losses': [-10.582, 1.275, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 650, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.767, 'head_losses': [-10.281, 0.514, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 700, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.285, 'head_losses': [-10.562, 0.277, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 750, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.211, 'head_losses': [-9.559, 0.348, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 800, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.666, 'head_losses': [-10.148, 0.482, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 850, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.698, 'head_losses': [-10.344, 0.646, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 900, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -7.937, 'head_losses': [-10.635, 2.698, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 950, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.419, 'head_losses': [-9.824, 0.406, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1000, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -9.695, 'head_losses': [-10.128, 0.433, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1050, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.102, 'head_losses': [-10.516, 0.415, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.507, 'head_losses': [-10.518, 0.01, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1150, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.033, 'head_losses': [-10.224, 0.192, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1200, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.179, 'head_losses': [-10.416, 0.236, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1250, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.006, 'head_losses': [-10.123, 0.117, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1300, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.71, 'head_losses': [-10.903, 0.193, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1350, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -8.911, 'head_losses': [-9.272, 0.361, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1400, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.875, 'head_losses': [-10.08, 0.205, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1450, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.636, 'head_losses': [-10.158, 1.522, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1500, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.2, 'head_losses': [-10.301, 0.102, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1550, 'n_batches': 3125, 'time': 0.013, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.104, 'head_losses': [-10.292, 0.188, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1600, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.945, 'head_losses': [-10.716, 1.771, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1650, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.61, 'head_losses': [-10.351, 0.741, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1700, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.842, 'head_losses': [-10.959, 0.117, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1750, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.791, 'head_losses': [-10.855, 0.064, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1800, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.503, 'head_losses': [-10.599, 0.096, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1850, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.597, 'head_losses': [-10.658, 0.061, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1900, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.702, 'head_losses': [-10.517, 0.814, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 1950, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -8.774, 'head_losses': [-9.798, 1.025, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2000, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.128, 'head_losses': [-10.354, 0.226, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2050, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.666, 'head_losses': [-10.13, 0.464, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.141, 'head_losses': [-10.575, 0.435, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2150, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.615, 'head_losses': [-10.679, 0.064, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2200, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.253, 'head_losses': [-10.289, 0.036, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2250, 'n_batches': 3125, 'time': 0.016, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.414, 'head_losses': [-10.687, 0.273, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2300, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.48, 'head_losses': [-9.872, 0.392, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2350, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.982, 'head_losses': [-10.257, 0.275, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2400, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.003, 'lr': 0.0003, 'loss': -10.352, 'head_losses': [-10.621, 0.269, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2450, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.607, 'head_losses': [-10.747, 0.14, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2500, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.997, 'head_losses': [-10.042, 0.046, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2550, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.845, 'head_losses': [-10.068, 0.222, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2600, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.175, 'head_losses': [-10.805, 1.63, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2650, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.157, 'head_losses': [-10.419, 0.262, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2700, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.515, 'head_losses': [-9.563, 0.048, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2750, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.487, 'head_losses': [-10.742, 0.255, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2800, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.458, 'head_losses': [-10.658, 0.2, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2850, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.66, 'head_losses': [-10.842, 0.182, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2900, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -10.157, 'head_losses': [-10.609, 0.453, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 2950, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.115, 'head_losses': [-10.192, 0.076, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 3000, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.009, 'lr': 0.0003, 'loss': -10.365, 'head_losses': [-10.487, 0.121, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 3050, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.575, 'head_losses': [-10.695, 0.119, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 3100, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 0.0003, 'loss': -10.152, 'head_losses': [-10.527, 0.374, 0.0]}

INFO:openpifpaf.network.trainer:applying ema

INFO:openpifpaf.network.trainer:{'type': 'train-epoch', 'epoch': 2, 'loss': -9.91726, 'head_losses': [-10.35309, 0.43583, 0.0], 'time': 38.1, 'n_clipped_grad': 0, 'max_norm': 0.0}

INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch002

INFO:openpifpaf.network.trainer:training state written: cifar10_tutorial.pkl.optim.epoch002

INFO:openpifpaf.network.trainer:{'type': 'val-epoch', 'epoch': 2, 'loss': -10.76985, 'head_losses': [-10.62396, -0.14589, 0.0], 'time': 5.3}

INFO:openpifpaf.network.trainer:restoring params from before ema

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 0, 'n_batches': 3125, 'time': 0.017, 'data_time': 0.059, 'lr': 0.0003, 'loss': -10.603, 'head_losses': [-10.687, 0.084, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 50, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.00020755, 'loss': -10.361, 'head_losses': [-10.269, -0.092, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 100, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.00014359, 'loss': -10.468, 'head_losses': [-10.352, -0.116, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 150, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 9.934e-05, 'loss': -11.084, 'head_losses': [-10.949, -0.135, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 200, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 6.873e-05, 'loss': -10.67, 'head_losses': [-10.52, -0.15, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 250, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 4.755e-05, 'loss': -10.733, 'head_losses': [-10.603, -0.13, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 300, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 3.289e-05, 'loss': -11.038, 'head_losses': [-10.863, -0.175, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 350, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.503, 'head_losses': [-10.353, -0.15, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 400, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.994, 'head_losses': [-10.813, -0.181, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 450, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.005, 'lr': 3e-05, 'loss': -10.524, 'head_losses': [-10.347, -0.177, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 500, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.849, 'head_losses': [-10.676, -0.173, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 550, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.386, 'head_losses': [-10.212, -0.174, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 600, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.564, 'head_losses': [-10.394, -0.17, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 650, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.99, 'head_losses': [-10.835, -0.155, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 700, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-05, 'loss': -11.027, 'head_losses': [-10.86, -0.166, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 750, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.811, 'head_losses': [-10.627, -0.183, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 800, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.525, 'head_losses': [-10.397, -0.128, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 850, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -11.334, 'head_losses': [-11.162, -0.173, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 900, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.683, 'head_losses': [-10.512, -0.172, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 950, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -11.136, 'head_losses': [-10.955, -0.181, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1000, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.924, 'head_losses': [-10.739, -0.186, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1050, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.993, 'head_losses': [-10.804, -0.189, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.319, 'head_losses': [-10.234, -0.085, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1150, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.648, 'head_losses': [-10.47, -0.178, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1200, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.742, 'head_losses': [-10.585, -0.157, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1250, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.539, 'head_losses': [-10.351, -0.188, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1300, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.904, 'head_losses': [-10.72, -0.183, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1350, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 3e-05, 'loss': -9.798, 'head_losses': [-9.625, -0.173, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1400, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-05, 'loss': -10.41, 'head_losses': [-10.24, -0.17, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1450, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 3e-05, 'loss': -10.338, 'head_losses': [-10.157, -0.181, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1500, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 3e-05, 'loss': -11.256, 'head_losses': [-11.065, -0.192, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1550, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 3e-05, 'loss': -11.032, 'head_losses': [-10.847, -0.185, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1600, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 2.276e-05, 'loss': -10.855, 'head_losses': [-10.658, -0.197, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1650, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 1.574e-05, 'loss': -11.561, 'head_losses': [-11.369, -0.192, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1700, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 1.089e-05, 'loss': -11.242, 'head_losses': [-11.049, -0.193, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1750, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 7.54e-06, 'loss': -10.594, 'head_losses': [-10.414, -0.18, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1800, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 5.21e-06, 'loss': -10.471, 'head_losses': [-10.289, -0.181, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1850, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3.61e-06, 'loss': -11.061, 'head_losses': [-10.873, -0.188, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1900, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.731, 'head_losses': [-10.537, -0.193, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 1950, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.224, 'head_losses': [-10.072, -0.151, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2000, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 3e-06, 'loss': -11.288, 'head_losses': [-11.091, -0.197, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2050, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.863, 'head_losses': [-10.709, -0.154, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -11.098, 'head_losses': [-10.896, -0.202, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2150, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.676, 'head_losses': [-10.48, -0.196, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2200, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.638, 'head_losses': [-10.457, -0.181, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2250, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.34, 'head_losses': [-10.143, -0.197, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2300, 'n_batches': 3125, 'time': 0.01, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.322, 'head_losses': [-10.124, -0.198, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2350, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.853, 'head_losses': [-10.648, -0.205, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2400, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.772, 'head_losses': [-10.583, -0.189, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2450, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.881, 'head_losses': [-10.694, -0.187, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2500, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -11.122, 'head_losses': [-10.921, -0.201, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2550, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.579, 'head_losses': [-10.39, -0.19, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2600, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -11.422, 'head_losses': [-11.225, -0.198, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2650, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.738, 'head_losses': [-10.545, -0.193, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2700, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -11.171, 'head_losses': [-10.983, -0.189, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2750, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.558, 'head_losses': [-10.369, -0.189, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2800, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 3e-06, 'loss': -11.28, 'head_losses': [-11.132, -0.147, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2850, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.709, 'head_losses': [-10.513, -0.196, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2900, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 3e-06, 'loss': -11.171, 'head_losses': [-10.999, -0.172, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 2950, 'n_batches': 3125, 'time': 0.011, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.788, 'head_losses': [-10.617, -0.17, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 3000, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.672, 'head_losses': [-10.488, -0.184, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 3050, 'n_batches': 3125, 'time': 0.008, 'data_time': 0.002, 'lr': 3e-06, 'loss': -10.841, 'head_losses': [-10.645, -0.196, 0.0]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 3100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.853, 'head_losses': [-10.67, -0.183, 0.0]}

INFO:openpifpaf.network.trainer:applying ema

INFO:openpifpaf.network.trainer:{'type': 'train-epoch', 'epoch': 3, 'loss': -10.85519, 'head_losses': [-10.68342, -0.17177, 0.0], 'time': 37.8, 'n_clipped_grad': 0, 'max_norm': 0.0}

INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch003

INFO:openpifpaf.network.trainer:training state written: cifar10_tutorial.pkl.optim.epoch003

INFO:openpifpaf.network.trainer:{'type': 'val-epoch', 'epoch': 3, 'loss': -10.8963, 'head_losses': [-10.70909, -0.18721, 0.0], 'time': 5.3}

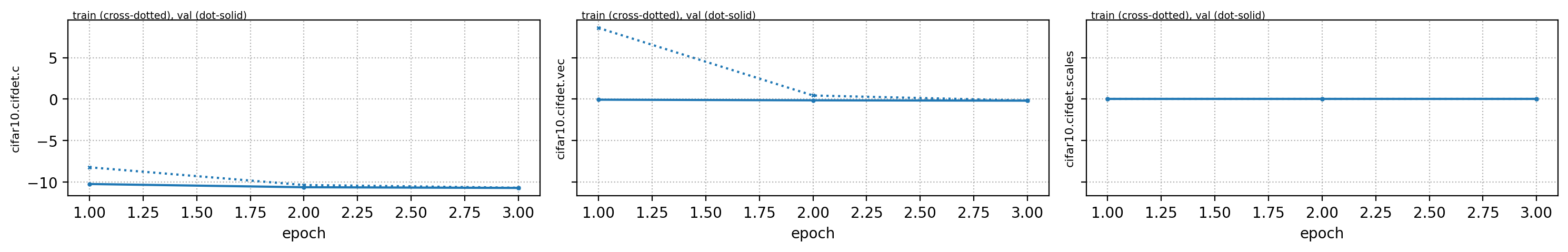

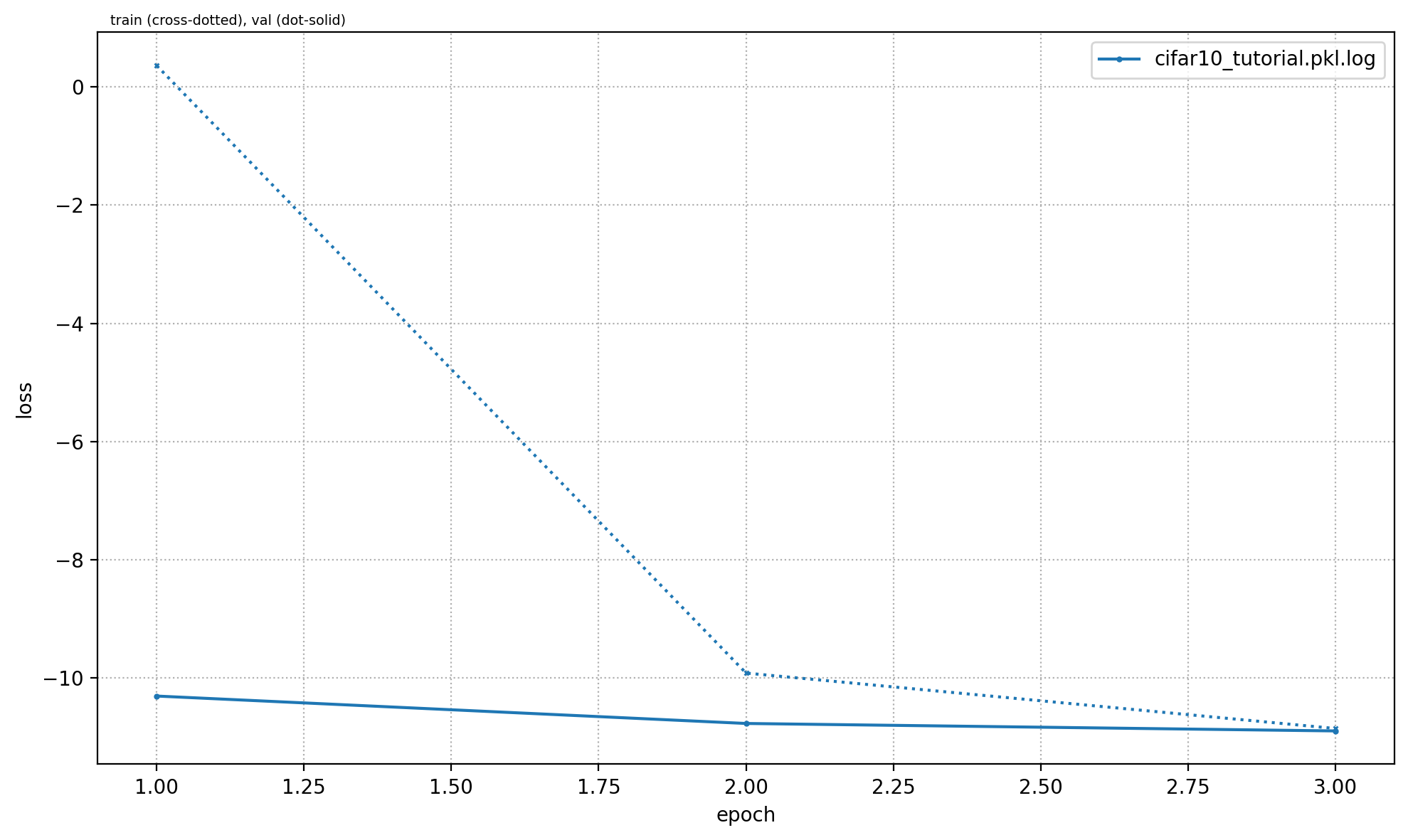

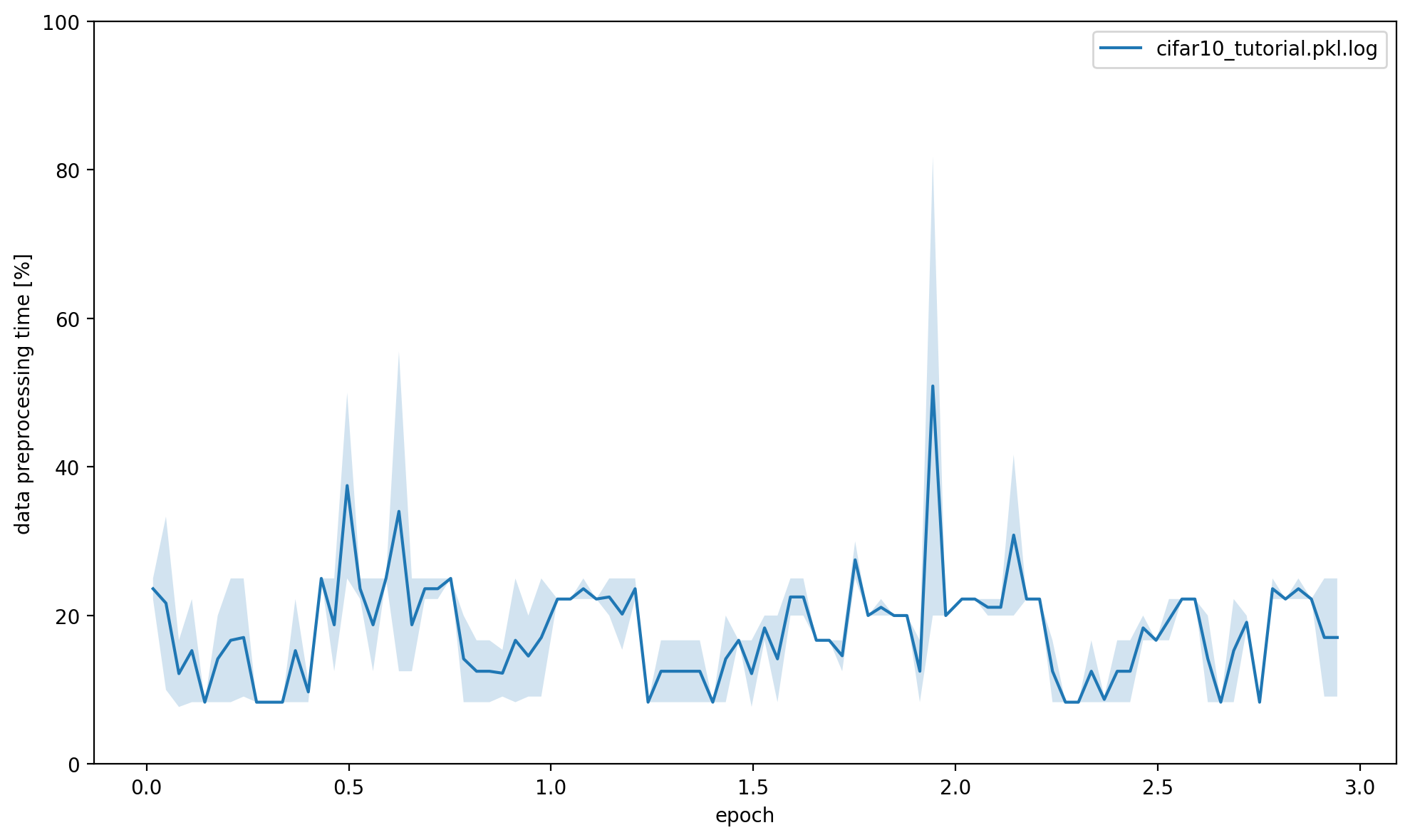

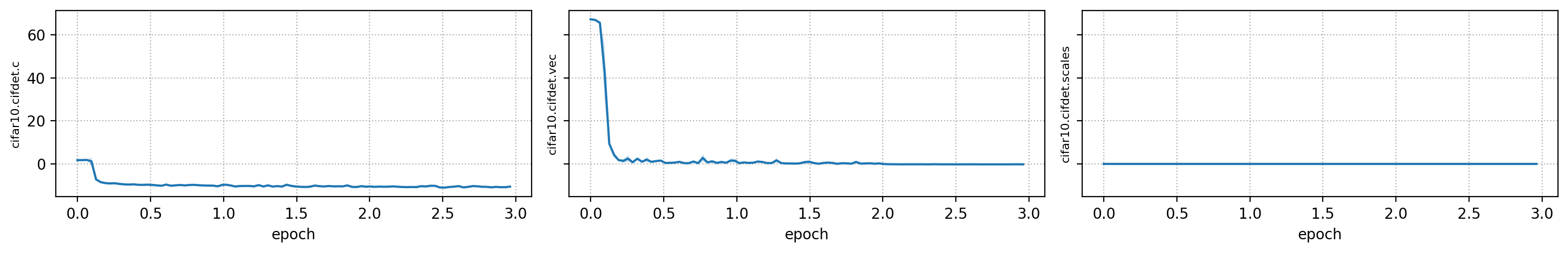

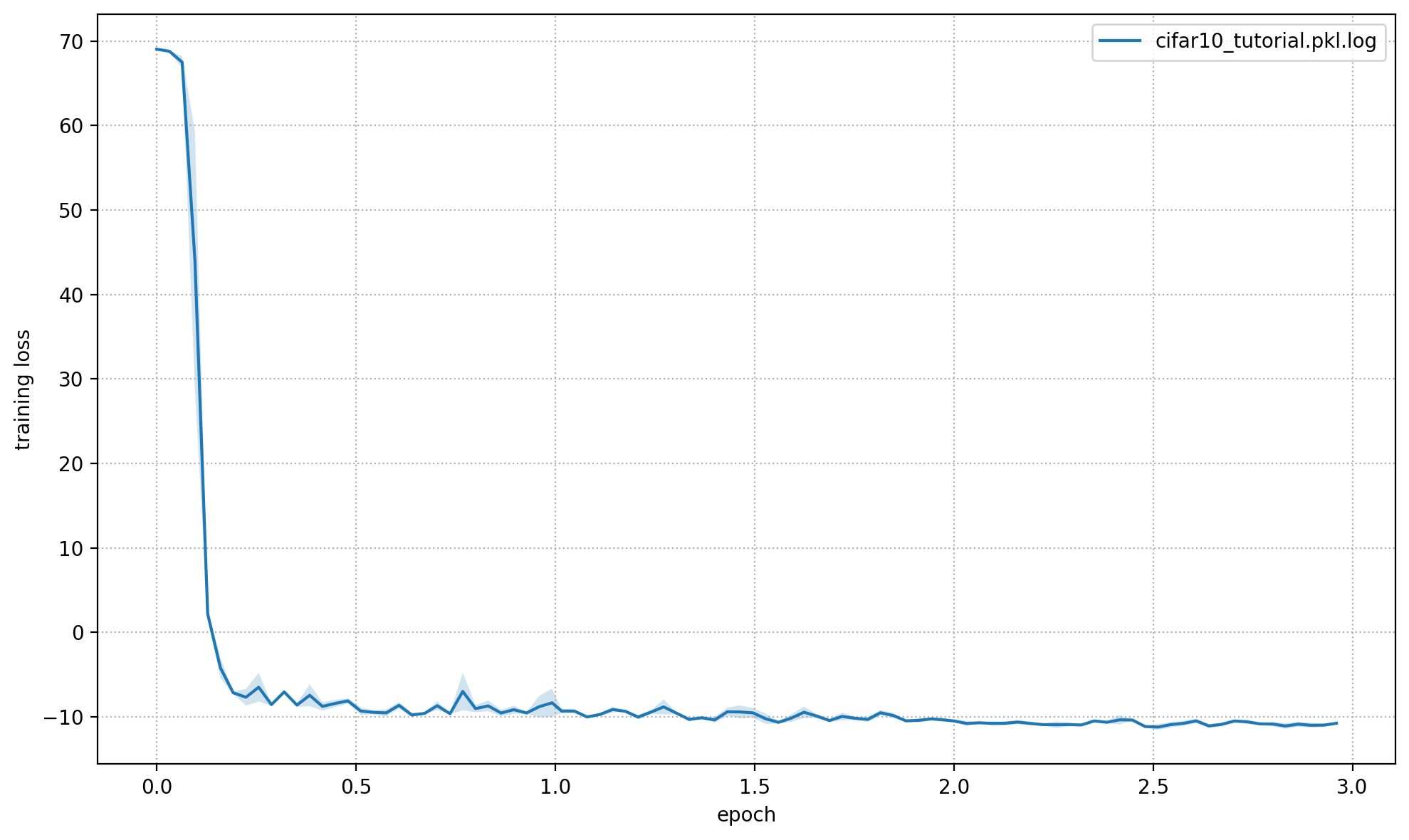
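The trainer lines printed above can also be summarized by hand. The following is a minimal sketch, not part of OpenPifPaf: it assumes the console format shown above (a fixed `INFO:openpifpaf.network.trainer:` prefix followed by a Python dict literal), and the helper names `parse_trainer_lines` and `mean_batch_loss_per_epoch` are made up for illustration.

```python
import ast
from collections import defaultdict

# Prefix of the console lines shown above (assumed to be stable).
PREFIX = 'INFO:openpifpaf.network.trainer:'


def parse_trainer_lines(lines):
    """Return the dict payloads found in trainer console lines."""
    records = []
    for line in lines:
        line = line.strip()
        if not line.startswith(PREFIX):
            continue
        payload = line[len(PREFIX):]
        if payload.startswith('{'):  # skip plain messages such as "applying ema"
            records.append(ast.literal_eval(payload))
    return records


def mean_batch_loss_per_epoch(records):
    """Average the logged 'loss' of all 'train' records, grouped by epoch."""
    losses = defaultdict(list)
    for record in records:
        if record['type'] == 'train':
            losses[record['epoch']].append(record['loss'])
    return {epoch: sum(values) / len(values) for epoch, values in losses.items()}


# A few lines copied from the output above.
sample = [
    "INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 850, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.002, 'lr': 0.0003, 'loss': -9.698, 'head_losses': [-10.344, 0.646, 0.0]}",
    "INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 1, 'batch': 900, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 0.0003, 'loss': -7.937, 'head_losses': [-10.635, 2.698, 0.0]}",
    "INFO:openpifpaf.network.trainer:applying ema",
    "INFO:openpifpaf.network.trainer:{'type': 'train-epoch', 'epoch': 2, 'loss': -9.91726, 'head_losses': [-10.35309, 0.43583, 0.0], 'time': 38.1, 'n_clipped_grad': 0, 'max_norm': 0.0}",
    "INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 2, 'batch': 50, 'n_batches': 3125, 'time': 0.009, 'data_time': 0.002, 'lr': 0.00020755, 'loss': -10.361, 'head_losses': [-10.269, -0.092, 0.0]}",
]

records = parse_trainer_lines(sample)
summary = mean_batch_loss_per_epoch(records)
print(summary)
```

Only every 50th batch is logged, so these averages are a coarse sample and will generally differ from the loss in the 'train-epoch' records.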

Plot Training Logs#

You can create a set of plots from the command line with python -m openpifpaf.logs cifar10_tutorial.pkl.log. You can also overlay multiple runs. Below we call the plotting code from that command directly to show the output in this notebook.

openpifpaf.logs.Plots(['cifar10_tutorial.pkl.log']).show_all()

{'cifar10_tutorial.pkl.log': ['--dataset=cifar10',

'--basenet=cifar10net',

'--log-interval=50',

'--epochs=3',

'--lr=0.0003',

'--momentum=0.95',

'--batch-size=16',

'--lr-warm-up-epochs=0.1',

'--lr-decay',

'2.0',

'2.5',

'--lr-decay-epochs=0.1',

'--loader-workers=2',

'--output=cifar10_tutorial.pkl']}

cifar10_tutorial.pkl.log: {'message': '', 'levelname': 'INFO', 'name': 'openpifpaf.network.trainer', 'asctime': '2024-03-14 13:39:53,940', 'type': 'train', 'epoch': 2, 'batch': 3100, 'n_batches': 3125, 'time': 0.012, 'data_time': 0.001, 'lr': 3e-06, 'loss': -10.853, 'head_losses': [-10.67, -0.183, 0.0]}

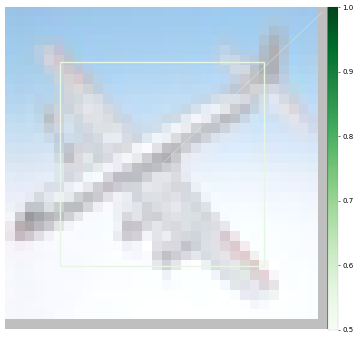

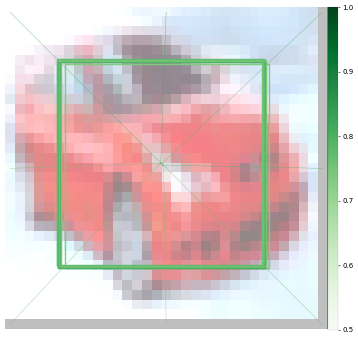
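The learning rates in the training output can be reproduced from the arguments listed above. The sketch below is a reading of the logged values, not the library's scheduler code: it assumes that each entry of `--lr-decay` starts a smooth, exponential reduction by a factor of 0.1 spread over `--lr-decay-epochs`, and it ignores the warm-up phase. The function name `lr_at` and the factor 0.1 are assumptions that happen to match the log.

```python
def lr_at(epoch, base_lr=0.0003, decay_starts=(2.0, 2.5),
          decay_epochs=0.1, factor=0.1):
    """Learning rate at a fractional epoch, ignoring warm-up.

    Each decay starts at an epoch in `decay_starts` and multiplies the
    learning rate by `factor` (assumed 0.1) over `decay_epochs` epochs.
    """
    lr = base_lr
    for start in decay_starts:
        # Fraction of this decay step that has been completed, in [0, 1].
        progress = min(max((epoch - start) / decay_epochs, 0.0), 1.0)
        lr *= factor ** progress
    return lr


n_batches = 3125
print(lr_at(2 + 50 / n_batches))    # logged: 0.00020755 (epoch 2, batch 50)
print(lr_at(2 + 1600 / n_batches))  # logged: 2.276e-05 (epoch 2, batch 1600)
```

With these assumptions, the first decay finishes after 0.1 epochs (about 313 batches), which agrees with the log reaching 3e-05 between batches 300 and 350 of epoch 2.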

Prediction#

First, using the command-line interface:

%%bash

python -m openpifpaf.predict --checkpoint cifar10_tutorial.pkl.epoch003 images/cifar10_*.png --seed-threshold=0.1 --json-output . --quiet

WARNING:openpifpaf.decoder.cifcaf:consistency: decreasing keypoint threshold to seed threshold of 0.100000

%%bash

cat cifar10_*.json

[{"category_id": 1, "category": "plane", "score": 0.404, "bbox": [4.98, 4.97, 21.01, 20.99]}, {"category_id": 9, "category": "ship", "score": 0.37, "bbox": [5.04, 4.96, 21.0, 21.02]}, {"category_id": 3, "category": "bird", "score": 0.325, "bbox": [4.97, 5.0, 20.99, 21.02]}, {"category_id": 5, "category": "deer", "score": 0.25, "bbox": [5.02, 4.85, 21.02, 21.04]}, {"category_id": 4, "category": "cat", "score": 0.208, "bbox": [4.98, 4.98, 21.01, 21.03]}, {"category_id": 6, "category": "dog", "score": 0.196, "bbox": [4.94, 5.0, 21.1, 20.96]}, {"category_id": 10, "category": "truck", "score": 0.168, "bbox": [5.11, 5.07, 20.93, 20.94]}, {"category_id": 8, "category": "horse", "score": 0.16, "bbox": [5.03, 5.04, 21.01, 21.03]}][{"category_id": 2, "category": "car", "score": 0.498, "bbox": [5.49, 5.27, 20.95, 20.93]}, {"category_id": 10, "category": "truck", "score": 0.457, "bbox": [5.14, 5.16, 21.02, 20.99]}][{"category_id": 9, "category": "ship", "score": 0.387, "bbox": [5.04, 5.05, 20.99, 21.04]}, {"category_id": 1, "category": "plane", "score": 0.357, "bbox": [5.08, 5.03, 20.95, 21.03]}, {"category_id": 3, "category": "bird", "score": 0.289, "bbox": [5.05, 5.0, 21.06, 20.89]}, {"category_id": 10, "category": "truck", "score": 0.286, "bbox": [4.98, 4.96, 20.99, 21.0]}, {"category_id": 2, "category": "car", "score": 0.27, "bbox": [5.0, 5.03, 20.95, 21.01]}, {"category_id": 4, "category": "cat", "score": 0.226, "bbox": [5.0, 4.99, 21.03, 21.06]}, {"category_id": 8, "category": "horse", "score": 0.207, "bbox": [5.02, 4.99, 20.96, 21.01]}, {"category_id": 5, "category": "deer", "score": 0.201, "bbox": [4.86, 4.96, 20.87, 21.07]}, {"category_id": 6, "category": "dog", "score": 0.196, "bbox": [4.94, 5.01, 21.12, 21.0]}, {"category_id": 7, "category": "frog", "score": 0.154, "bbox": [4.97, 4.96, 20.96, 21.07]}][{"category_id": 10, "category": "truck", "score": 0.381, "bbox": [5.01, 4.93, 21.0, 20.92]}, {"category_id": 8, "category": "horse", "score": 0.316, "bbox": [5.22, 4.9, 20.98, 21.07]}, {"category_id": 2, "category": "car", "score": 0.302, "bbox": [4.91, 4.95, 21.02, 21.04]}, {"category_id": 4, "category": "cat", "score": 0.279, "bbox": [4.84, 5.05, 20.99, 20.95]}, {"category_id": 9, "category": "ship", "score": 0.263, "bbox": [4.99, 5.0, 21.0, 21.01]}, {"category_id": 1, "category": "plane", "score": 0.243, "bbox": [4.95, 4.92, 20.99, 21.01]}, {"category_id": 6, "category": "dog", "score": 0.234, "bbox": [4.9, 4.93, 20.95, 20.96]}, {"category_id": 7, "category": "frog", "score": 0.202, "bbox": [4.89, 5.05, 21.05, 20.91]}, {"category_id": 3, "category": "bird", "score": 0.2, "bbox": [4.98, 4.9, 21.03, 21.06]}, {"category_id": 5, "category": "deer", "score": 0.195, "bbox": [4.91, 5.07, 21.04, 20.92]}]
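Because `cat` concatenates the per-image files, the output above is several JSON arrays written back to back (note the `][` boundaries), which `json.loads` cannot parse in one call. The sketch below shows one way to split such a stream with the standard library and pick the highest-scoring category per image. The helper name `split_concatenated_json` is hypothetical, and the sample string is abbreviated from the output above (the second array is cut to its first two entries).

```python
import json


def split_concatenated_json(text):
    """Decode JSON documents that were written back to back in one string."""
    decoder = json.JSONDecoder()
    documents = []
    pos = 0
    while pos < len(text):
        # raw_decode does not skip leading whitespace, so do it here.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            break
        document, pos = decoder.raw_decode(text, pos)
        documents.append(document)
    return documents


# Abbreviated sample: predictions for two of the images above.
sample = (
    '[{"category_id": 2, "category": "car", "score": 0.498, "bbox": [5.49, 5.27, 20.95, 20.93]}, '
    '{"category_id": 10, "category": "truck", "score": 0.457, "bbox": [5.14, 5.16, 21.02, 20.99]}]'
    '[{"category_id": 9, "category": "ship", "score": 0.387, "bbox": [5.04, 5.05, 20.99, 21.04]}, '
    '{"category_id": 1, "category": "plane", "score": 0.357, "bbox": [5.08, 5.03, 20.95, 21.03]}]'
)

per_image = split_concatenated_json(sample)
top_categories = [max(preds, key=lambda p: p['score'])['category'] for preds in per_image]
print(top_categories)  # ['car', 'ship']
```

Reading each JSON file separately with `json.load` avoids the problem altogether; the splitting step is only needed when working from concatenated output like the one printed here.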

Using the Python API:

net_cpu, _ = openpifpaf.network.Factory(checkpoint='cifar10_tutorial.pkl.epoch003').factory()

preprocess = openpifpaf.transforms.Compose([

    openpifpaf.transforms.NormalizeAnnotations(),

    openpifpaf.transforms.CenterPadTight(16),

    openpifpaf.transforms.EVAL_TRANSFORM,

])

openpifpaf.decoder.utils.CifDetSeeds.set_threshold(0.3)

decode = openpifpaf.decoder.factory([hn.meta for hn in net_cpu.head_nets])

data = openpifpaf.datasets.ImageList([

    'images/cifar10_airplane4.png',

    'images/cifar10_automobile10.png',

    'images/cifar10_ship7.png',

    'images/cifar10_truck8.png',

], preprocess=preprocess)

for image, _, meta in data:

    predictions = decode.batch(net_cpu, image.unsqueeze(0))[0]

    print(['{} {:.0%}'.format(pred.category, pred.score) for pred in predictions])

['plane 40%', 'ship 37%', 'bird 33%']

['car 50%', 'truck 46%']

['ship 39%', 'plane 36%']

['truck 38%', 'horse 32%', 'car 30%']

Evaluation#

I selected the images above because their category is clear to me. CIFAR10 also contains images whose category is harder to tell, and those are probably harder for a neural network as well.

Therefore, we should run a proper quantitative evaluation with openpifpaf.eval. It stores its output as a JSON file, which we print afterwards.

%%bash

python -m openpifpaf.eval --checkpoint cifar10_tutorial.pkl.epoch003 --dataset=cifar10 --seed-threshold=0.1 --instance-threshold=0.1 --quiet

WARNING:openpifpaf.decoder.cifcaf:consistency: decreasing keypoint threshold to seed threshold of 0.100000

[INFO] Register count_convNd() for <class 'torch.nn.modules.conv.Conv2d'>.

%%bash

python -m json.tool cifar10_tutorial.pkl.epoch003.eval-cifar10.stats.json

{

"text_labels": [

"total",

"plane",

"car",

"bird",

"cat",

"deer",

"dog",

"frog",

"horse",

"ship",

"truck"

],

"stats": [

0.4459,

0.509,

0.669,

0.215,

0.33,

0.359,

0.315,

0.47,

0.549,

0.579,

0.464

],

"args": [

"/opt/hostedtoolcache/Python/3.10.13/x64/lib/python3.10/site-packages/openpifpaf/eval.py",

"--checkpoint",

"cifar10_tutorial.pkl.epoch003",

"--dataset=cifar10",

"--seed-threshold=0.1",

"--instance-threshold=0.1",

"--quiet"

],

"version": "0.14.2+1.g9ac6c0f",

"dataset": "cifar10",

"total_time": 23.20525859700001,

"checkpoint": "cifar10_tutorial.pkl.epoch003",

"count_ops": [

421736880.0,

105180.0

],

"file_size": 438894,

"n_images": 10000,

"decoder_time": 7.654343058004315,

"nn_time": 6.4898230500089085

}

We see that some categories like “plane”, “car” and “ship” are learned quickly, whereas others are learned poorly (e.g. “bird”). The poor performance is not surprising as we trained our network for only three epochs.
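To make that comparison concrete, the labels and scores from the stats file can be paired and ranked. This is a small sketch that uses the values printed above as literals rather than reading the file. The observation that "total" equals the mean of the ten per-category values holds for these numbers; whether it holds in general is an assumption.

```python
# Values copied from the stats JSON printed above.
text_labels = ['total', 'plane', 'car', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']
stats = [0.4459, 0.509, 0.669, 0.215, 0.33, 0.359,
         0.315, 0.47, 0.549, 0.579, 0.464]

scores = dict(zip(text_labels, stats))
total = scores.pop('total')  # keep only the per-category entries in `scores`

# Rank categories from best to worst score.
ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
for category, score in ranked:
    print('{:<6} {:.3f}'.format(category, score))

# For this run, the total matches the mean of the per-category scores.
mean_score = sum(scores.values()) / len(scores)
print('mean of categories: {:.4f}, total: {:.4f}'.format(mean_score, total))
```

In this ranking, “car” comes first and “bird” last, which matches the reading of the table above.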